Day 01: Exploring data with pandas#

Learning goals#

Today, we will make sure that everyone learns how they can use pandas, matplotlib, and seaborn.

After working through these ideas, you should be able to:

- know where to find the documentation for pandas, matplotlib, and seaborn
- load a dataset with pandas and explore it
- visualize a dataset with matplotlib and seaborn
- do some basic data analysis

Notebook instructions#

We will work through the notebook making sure to write all necessary code and answer any questions. We will start together with the most commonly performed tasks. Then you will work on the analyses, posed as research questions, in groups.

Useful imports (make sure to execute this cell!)#

Let’s get a few of our imports out of the way. If you find others you need to add, consider coming back and putting them here.

# necessary imports for this notebook

%matplotlib inline

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style('ticks') # setting style

sns.set_context('talk') # setting context

sns.set_palette('colorblind') # setting palette

Libraries#

We will be using the following libraries to get started:

- numpy for numerical operations.

  The numpy library is a fundamental package for scientific computing in Python. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays. You can find the documentation here.

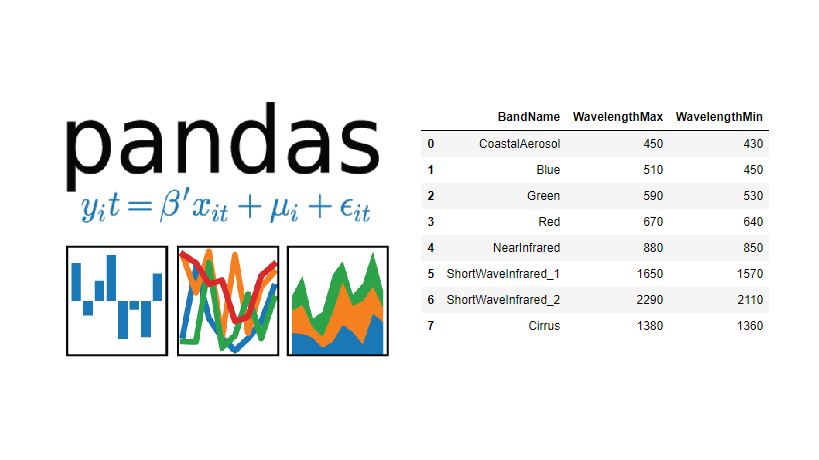

- pandas for data manipulation.

  The pandas library is a powerful tool for data analysis and manipulation in Python. It provides data structures like DataFrames and Series, which make it easy to work with structured data. It is quickly becoming the standard for data analysis in Python. You can find the documentation here.

- matplotlib for data visualization.

  The matplotlib library is a widely used library for creating static, animated, and interactive visualizations in Python. It provides a flexible framework for creating a wide range of plots and charts. You can find the documentation here.

- seaborn for statistical data visualization.

  The seaborn library is built on top of matplotlib and provides a high-level interface for drawing attractive and informative statistical graphics. It simplifies the process of creating complex visualizations. You can find the documentation here.

NOTE: You should read through documentation for these libraries as you go along. The documentation is a great resource for learning how to use these libraries effectively. And the documentation is written the same way for almost all Python libraries, so it is a good skill to develop as you learn to use Python for science.
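To make the descriptions above concrete, here is a minimal sketch of the basic objects these libraries provide: a numpy array, a pandas DataFrame, and a pandas Series. The column names and values are made up for illustration and are not taken from the dataset used later.

```python
import numpy as np
import pandas as pd

# A numpy array supports elementwise math on all of its values at once
magnitudes = np.array([18.2, 19.5, 17.8])
brighter = magnitudes - 1.0  # subtracts 1 from every element

# A DataFrame is a table with named columns (toy values, not real data)
toy = pd.DataFrame({
    "name": ["a", "b", "c"],
    "mag": magnitudes,
})

# Selecting a single column returns a Series
mag_series = toy["mag"]

print(type(toy).__name__, type(mag_series).__name__)
print(mag_series.mean())
```

Calling help(pd.DataFrame) or help(np.array) in a notebook cell prints the same docstrings that appear in the online documentation.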

1. Stellar Classification Dataset - SDSS17#

The Stellar Classification Dataset is a collection of observations of stars from the Sloan Digital Sky Survey (SDSS). The dataset contains various features of stars, such as their brightness, color, and spectral type, which can be used to classify them into different categories. The data set has 100,000 observations, with 17 features and 1 class column. The features include:

| Feature | Description |
|---|---|
| obj_ID | Object Identifier, the unique value that identifies the object in the image catalog used by the CAS |
| alpha | Right Ascension angle (at J2000 epoch) |
| delta | Declination angle (at J2000 epoch) |
| u | Ultraviolet filter in the photometric system |
| g | Green filter in the photometric system |
| r | Red filter in the photometric system |
| i | Near Infrared filter in the photometric system |
| z | Infrared filter in the photometric system |
| run_ID | Run Number used to identify the specific scan |
| rerun_ID | Rerun Number to specify how the image was processed |
| cam_col | Camera column to identify the scanline within the run |
| field_ID | Field number to identify each field |
| spec_obj_ID | Unique ID used for optical spectroscopic objects (this means that 2 different observations with the same spec_obj_ID must share the output class) |
| redshift | Redshift value based on the increase in wavelength |
| plate | Plate ID, identifies each plate in SDSS |
| MJD | Modified Julian Date, used to indicate when a given piece of SDSS data was taken |
| fiber_ID | Fiber ID that identifies the fiber that pointed the light at the focal plane in each observation |

And the class column is:

| Feature | Description |
|---|---|
| class | object class (galaxy, star or quasar object) |

Some of the features are values representing the brightness of the star in different filters, while others are positional coordinates in the sky. The class column indicates whether the object is a galaxy, star, or quasar.

For this exercise, we will use the Stellar Classification Dataset to explore how to load and visualize data using pandas, matplotlib, and seaborn. Later we will use this dataset for some classification and regression tasks.

2. Loading and exploring a dataset#

The goal is typically to read some sort of preexisting data into a DataFrame so we can work with it.

Pandas is pretty flexible about reading in data and can read in a variety of formats. However, it sometimes needs a little help. We are going to read in a CSV file, which is a common format for data.

The Stellar Classification dataset has a particularly nice set of features and is of a manageable size, which makes it a good dataset for learning new data analysis techniques or testing out code. This allows one to focus on the code and data science methods without getting too caught up in wrangling the data. However, data wrangling is an authentic part of doing any sort of meaningful data analysis, as data are more often messy than not.

Although you will be working with this dataset today, you may still have to do a bit of wrangling along the way.

2.1 Reading in the data#

Download the data set from Kaggle and save it in the same directory as this notebook, or you can use the following code to load it if you cloned the repository.

NOTE: We need to check this data for missing values or other issues before we can use it.

✅ Tasks:#

- Use read_csv() to load the data
- Use head() to look at the first few rows

Note: For this data set, the default delimiter used by read_csv() is a comma, which matches the file, so we don’t need to specify it. If you were reading in a file with a different delimiter, you would need to specify it using the sep parameter. Moreover, if the data had headers that were not the first row, you would need to specify the header parameter.
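As a rough illustration of the sep and header parameters mentioned in the note, the sketch below reads a small, made-up text table from memory rather than from disk. The file contents, the semicolon delimiter, and the extra first line are all assumptions chosen to show the parameters; the real dataset does not need them.

```python
import io
import pandas as pd

# Hypothetical file contents: one line of notes, then a header row,
# with semicolons as the delimiter
raw_text = """exported from a made-up catalog
obj_ID;u;g;class
1;18.2;17.1;STAR
2;19.5;18.9;GALAXY
3;20.1;19.7;QSO
"""

# sep=';' sets the delimiter; header=1 says the column names are on the
# second line (counting from 0), so the line before it is skipped
toy_df = pd.read_csv(io.StringIO(raw_text), sep=";", header=1)

print(toy_df.head())
print(toy_df.shape)
```

For a file on disk, the first argument would be the path to the file instead of the io.StringIO object.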

### your code here

2.2 Checking the data#

One of the first things we want to do is check the data for missing values or other issues. The pandas library provides several methods to help us with this. We will start by using info(), which provides a concise summary of the DataFrame, including the number of non-null values in each column and the data types.

This is a good first step to understand the structure of the data and identify any potential issues, such as missing values or incorrect data types. Moreover, it will tell you how your data was imported, and if there are any columns that were not imported correctly.
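The sketch below shows the kinds of issues info() can surface, using a small made-up DataFrame that has one missing value and one column of numbers stored as text. The column names and values are assumptions for illustration only.

```python
import numpy as np
import pandas as pd

toy_df = pd.DataFrame({
    "obj_ID": [1, 2, 3, 4],
    "u": [18.2, np.nan, 20.1, 19.0],        # one missing value
    "g": ["17.1", "18.9", "bad", "18.0"],   # numbers stored as text
    "class": ["STAR", "GALAXY", "QSO", "STAR"],
})

# Prints column names, non-null counts, and dtypes
toy_df.info()

# Count missing values per column
missing = toy_df.isna().sum()
print(missing)

# Convert text to numbers; entries that cannot be parsed become NaN
toy_df["g"] = pd.to_numeric(toy_df["g"], errors="coerce")
print(toy_df.dtypes)
```

A column that should be numeric but shows up with a text dtype is often a sign that some entries could not be parsed as numbers during import.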

✅ Tasks:#

- Use info() to check the data types and missing values

What do you notice about the data? Are there any issues with the import?

### your code here

Next, we will use describe() to get a summary of the data. This will give us some basic statistics about the numerical columns in the DataFrame, such as the mean, standard deviation, minimum, and maximum values. This is useful for understanding the distribution of the data and identifying any potential outliers.

Researchers sometimes use aberrant values to identify potential issues with the data, such as errors in data entry or measurement. Sometimes, these values are read in as NaN (not a number) or inf (infinity), which can cause issues with analysis. But, other times, the researcher might force a particular value to be a certain number, such as 0 or 99, to indicate that the value is missing or not applicable.
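Here is a minimal sketch of how a placeholder value can show up in describe() and how it might be converted to a missing value. The placeholder -9999 and the toy values are assumptions for illustration; always check what convention a given dataset uses.

```python
import numpy as np
import pandas as pd

toy_df = pd.DataFrame({
    "u": [18.2, 19.5, -9999.0, 20.1],
    "g": [17.1, 18.9, 19.7, -9999.0],
})

# The placeholder stands out in the min row of the summary
summary = toy_df.describe()
print(summary.loc[["min", "max"]])

# Treat the placeholder as missing (the value -9999 is an assumption here)
cleaned = toy_df.replace(-9999.0, np.nan)
print(cleaned.describe().loc[["count", "min"]])
```

After the replacement, the count row drops for the affected columns because describe() ignores NaN values.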

✅ Tasks:#

- Use describe() to get a summary of the data

What do you notice about the data? Are there any issues with starting the analysis?

### your code here

2.3 Slicing the data#

You likely noticed there were some problematic values in the data; some of the photometric values are negative, which is not possible. We will need to remove these rows from the DataFrame before we can do any analysis. However, we can also use this as an opportunity to practice slicing the DataFrame. So we will also reduce the DataFrame to only the columns we are interested in.

Slicing a DataFrame is a common operation in pandas and allows you to select specific rows and columns based on certain conditions. You can use boolean indexing to filter the DataFrame based on conditions, such as selecting rows where a certain column is greater than a certain value. Here are some common examples of slicing a DataFrame:

# Select all rows where the 'u' column is greater than 0

df_u_positive = df[df['u'] > 0]

# Select specific columns

df_selected_columns = df[['obj_ID', 'class', 'u', 'g', 'r', 'i', 'z']]

# Select rows where the 'class' column is 'STAR'
# (labels are case-sensitive; check df['class'].unique() for the exact spelling)

df_stars = df[df['class'] == 'STAR']
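The examples above each use a single condition. The sketch below, on a made-up DataFrame with hypothetical columns x, y, and label, shows one way to combine conditions and to select rows and columns in one step.

```python
import pandas as pd

toy_df = pd.DataFrame({
    "obj_ID": [1, 2, 3, 4],
    "x": [1.5, -2.0, 3.0, 4.5],
    "y": [0.5, 1.0, -1.0, 2.5],
    "label": ["a", "b", "a", "b"],
})

# Combine conditions with & (and) or | (or); each condition needs parentheses
both_positive = toy_df[(toy_df["x"] > 0) & (toy_df["y"] > 0)]

# Apply the same test to several columns and keep rows where all of them pass
cols = ["x", "y"]
all_positive = toy_df[(toy_df[cols] > 0).all(axis=1)]

# .loc selects rows (by condition) and columns (by name) in one step
subset = toy_df.loc[toy_df["label"] == "b", ["obj_ID", "x"]]

print(both_positive)
print(subset)
```

The .all(axis=1) form scales better than chaining many & conditions when the same test applies to a list of columns.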

✅ Tasks:#

- Reduce the DataFrame to only the columns we are interested in (obj_ID, class, u, g, r, i, z, and redshift), that is, the object ID, class, and the photometric values in the different filters, as well as the redshift value.
- Remove rows where any of the photometric values (u, g, r, i, z) are negative.
- Store the result in a new DataFrame called df_stellar.
- Use describe() to check the new DataFrame.

### your code here

3. Visualizing your data#

Now that we have a clean DataFrame, we can start visualizing the data. Visualization is an important part of data analysis, as it allows us to see patterns and relationships in the data that may not be immediately obvious from the raw data. There are many ways to visualize data in Python, but we will focus on two libraries: matplotlib and seaborn. These are the most commonly used libraries for data visualization in Python, and they provide a wide range of plotting options.

3.1 Using matplotlib#

matplotlib is a powerful library for creating static, animated, and interactive visualizations in Python. It provides a flexible framework for creating a wide range of plots and charts. The most common way to use matplotlib is to create a figure and then add one or more axes to the figure. You can then plot data on the axes using various plotting functions.

Here’s a simple example of how to create a scatter plot using matplotlib for a DataFrame df with columns x and y:

import matplotlib.pyplot as plt

# Create a figure and axes

fig, ax = plt.subplots()

# Plot data on the axes

ax.plot(df['x'], df['y'], 'o')

# Set the x and y axis labels

ax.set_xlabel('x')

ax.set_ylabel('y')

# Set the title of the plot

ax.set_title('x vs y')

# Show the plot

plt.show()

✅ Tasks:#

- Create a scatter plot of u vs g using matplotlib.
- Label the x and y axes and give the plot a title.

### your code here

Now that you have the hang of it, let’s try a few more plots.
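The figure-and-axes pattern extends to several panels in one figure. The sketch below uses randomly generated toy data with hypothetical columns x, y1, and y2; plt.subplots(1, 2) returns an array of axes, one per panel. It is one possible layout, not the only way to arrange multiple plots.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Toy data: two columns that depend on x in different ways
rng = np.random.default_rng(0)
toy_df = pd.DataFrame({"x": rng.normal(size=50)})
toy_df["y1"] = 2 * toy_df["x"] + rng.normal(scale=0.5, size=50)
toy_df["y2"] = -toy_df["x"] + rng.normal(scale=0.5, size=50)

# One row, two columns of panels; axes holds one Axes object per panel
fig, axes = plt.subplots(1, 2, figsize=(10, 4), sharex=True)

for ax, col in zip(axes, ["y1", "y2"]):
    ax.plot(toy_df["x"], toy_df[col], "o")
    ax.set_xlabel("x")
    ax.set_ylabel(col)
    ax.set_title(f"x vs {col}")

# Adjust spacing so labels and titles do not overlap
fig.tight_layout()
```

In a notebook the figure is displayed when the cell finishes; in a script, add plt.show() at the end.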

✅ Tasks:#

- Create a series of scatter plots of u vs g, u vs r, u vs i, and u vs z in a single figure using matplotlib.

### your code here

Ok, this is great, but we should notice that there are three classes of objects in the data: galaxy, star, and quasar. We can use this information to color the points in the scatter plot based on their class. You can do this by using the c parameter in the scatter() function to specify the color of the points based on the class column.

For example, if you have a dataframe df with a column class, and variables x and y, you can create a scatter plot with points colored by class like this:

import matplotlib.pyplot as plt

# Create a figure and axes

fig, ax = plt.subplots()

# Plot data on the axes, coloring by class

ax.scatter(df['x'], df['y'], c=df['class'].astype('category').cat.codes, cmap='viridis')

# Set the x and y axis labels

ax.set_xlabel('x')

ax.set_ylabel('y')

# Set the title of the plot

ax.set_title('x vs y colored by class')

# Show the plot

plt.show()
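Coloring by category codes, as above, does not produce a legend that names each class. One alternative, sketched below on made-up data, is to loop over the groups and call scatter() once per class with a label. The column names and values here are assumptions for illustration.

```python
import pandas as pd
import matplotlib.pyplot as plt

toy_df = pd.DataFrame({
    "x": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "y": [2.1, 3.9, 6.2, 1.0, 2.2, 2.9],
    "class": ["a", "a", "a", "b", "b", "b"],
})

fig, ax = plt.subplots()

# One scatter call per class, so each class gets its own color and legend entry
for name, group in toy_df.groupby("class"):
    ax.scatter(group["x"], group["y"], label=name)

ax.set_xlabel("x")
ax.set_ylabel("y")
ax.set_title("x vs y colored by class")
ax.legend(title="class")
```

Each call to scatter() takes the next color from the current color cycle, so the palette set at the top of the notebook applies here too.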

✅ Tasks:#

- Create a scatter plot of u vs g colored by class using matplotlib.
- Create a series of scatter plots of u vs g, u vs r, u vs i, and u vs z in a single figure using matplotlib, colored by class.

### your code here

3.2 Using seaborn#

These plots are great, but they are not as visually appealing as we would like. Also, we would like to add information to the plots to make them more informative. This is where seaborn comes in. seaborn is built on top of matplotlib and provides a high-level interface for drawing attractive and informative statistical graphics. It simplifies the process of creating complex visualizations.

For example, let’s create a scatter plot of u vs g using seaborn and color the points by class. For a data frame df with columns x, y, and class, you can create a scatter plot like this:

import matplotlib.pyplot as plt

import seaborn as sns

# Create a scatter plot of x vs y colored by class

sns.scatterplot(data=df, x='x', y='y', hue='class')

# Set the title of the plot

plt.title('x vs y colored by class')

# Show the plot

plt.show()

See how easy that was? seaborn takes care of the details for us, such as adding a legend and setting the colors based on the class column.
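Because seaborn draws on matplotlib axes, the two can be combined to place several seaborn plots in one figure. The sketch below uses randomly generated toy data with hypothetical columns x, y1, y2, and class, and passes each panel to sns.scatterplot through its ax parameter.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns

# Toy data with three made-up classes
rng = np.random.default_rng(1)
n = 60
toy_df = pd.DataFrame({
    "x": rng.normal(size=n),
    "class": rng.choice(["a", "b", "c"], size=n),
})
toy_df["y1"] = toy_df["x"] + rng.normal(scale=0.3, size=n)
toy_df["y2"] = toy_df["x"] ** 2 + rng.normal(scale=0.3, size=n)

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

for ax, col in zip(axes, ["y1", "y2"]):
    # ax= tells seaborn which panel to draw on
    sns.scatterplot(data=toy_df, x="x", y=col, hue="class", ax=ax)
    ax.set_title(f"x vs {col}")

fig.tight_layout()
```

seaborn also offers figure-level functions such as sns.relplot and sns.pairplot that build the grid of panels for you; the documentation describes when each is appropriate.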

✅ Tasks:#

- Create a scatter plot of u vs g colored by class using seaborn.
- Create a series of scatter plots of u vs g, u vs r, u vs i, and u vs z in a single figure using seaborn, colored by class.

### your code here

4. Data analysis#

Great! Now that we’ve learned to import data, to review it for issues, and to visualize it, we can start to do some data analysis. For these tasks, you will need to investigate how to perform the analysis and present the results. You can use the documentation for pandas, matplotlib, and seaborn to help you with this.

You are welcome to use any additional libraries that you like.

Research questions#

- What is the distribution of the photometric values (u, g, r, i, z) for each class? That is, how do the photometric values vary for each class of object? Think histograms, box plots, or violin plots.
- How do the photometric values (u, g, r, i, z) linearly correlate with each other for each class? Think scatter plots; correlation matrices; and lines of best fit.
- What is the distribution of redshift values for each class? Think histograms or box plots.
- How do photometric values (u, g, r, i, z) vary with redshift for each class? Think scatter plots with lines of best fit.
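As a possible starting point for these questions, the sketch below shows per-class summary statistics, per-class correlation matrices, and a line of best fit computed with np.polyfit. It uses randomly generated toy data with hypothetical columns x, y, and class, and the slope of 0.8 is made up; it is one approach among several, not a prescribed method.

```python
import numpy as np
import pandas as pd

# Toy data: y depends linearly on x, plus noise
rng = np.random.default_rng(2)
n = 100
toy_df = pd.DataFrame({
    "class": np.repeat(["a", "b"], n // 2),
    "x": rng.normal(loc=20.0, scale=1.0, size=n),
})
toy_df["y"] = 0.8 * toy_df["x"] + rng.normal(scale=0.2, size=n)

# Summary statistics for each class
per_class_stats = toy_df.groupby("class")[["x", "y"]].describe()

# Pearson correlation matrix, computed separately for each class
per_class_corr = toy_df.groupby("class")[["x", "y"]].corr()

# Line of best fit per class: with deg=1, np.polyfit returns slope then intercept
fits = {}
for name, group in toy_df.groupby("class"):
    slope, intercept = np.polyfit(group["x"], group["y"], deg=1)
    fits[name] = (slope, intercept)

print(per_class_corr)
print(fits)
```

To draw a fitted line on a scatter plot, evaluate slope * x + intercept over a range of x values and pass the result to ax.plot(). seaborn's regplot and lmplot can also fit and draw the line in one call.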

✅ Tasks:#

- Work in groups to answer the research questions.
- Use the documentation for pandas, matplotlib, and seaborn to help you with the analysis.
- Document your analysis in the notebook, including any code you used and the results of your analysis.

Take your time, there’s no rush to complete all the tasks. The goal is to learn how to use the tools and to practice data analysis.

### your code here